Chapter 13

Computational Fluid Dynamics (CFD)

"The important thing is not to stop questioning. Curiosity has its own reason for existing." — Albert Einstein

Chapter 13 of CPVR focuses on Computational Fluid Dynamics (CFD) and its implementation using Rust. The chapter begins with an introduction to CFD principles, emphasizing the importance of mathematical modeling and the role of Rust in modern simulations. It delves into discretization methods, exploring finite difference, volume, and element methods, and discusses solving the Navier-Stokes equations, highlighting Rust’s capabilities in implementing these complex algorithms. The chapter also covers turbulence modeling, exploring various models like RANS, LES, and DNS, and discusses the significance of parallel and distributed computing in CFD, providing insights into domain decomposition and load balancing. Finally, the chapter emphasizes the importance of visualization and analysis, offering practical guidance on using Rust for generating and interpreting CFD results.

13.1. Introduction to Computational Fluid Dynamics (CFD)

Computational Fluid Dynamics (CFD) is a vital tool in modern engineering and scientific research, allowing us to simulate and analyze the behavior of fluids under various conditions. At the heart of CFD are the Navier-Stokes equations, which describe the motion of fluid substances. These equations are derived from the fundamental principles of fluid mechanics, including the conservation of mass, momentum, and energy. The Navier-Stokes equations are pivotal because they capture the essence of fluid dynamics, governing both incompressible and compressible flows across different flow regimes, such as laminar and turbulent flows.

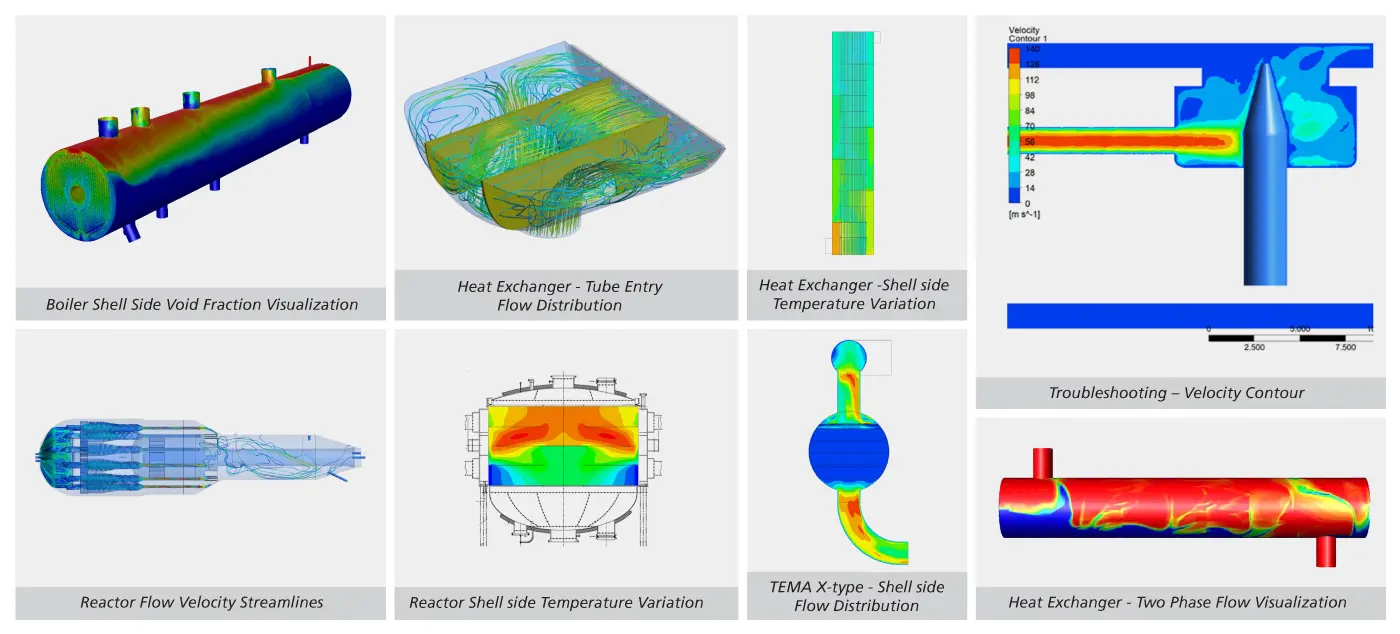

Illustration of Computational Fluid Dynamic use cases.

Understanding the historical context of CFD is crucial. The evolution of CFD from simple analytical solutions to complex numerical simulations reflects advancements in computational power and numerical methods. Initially, fluid dynamics problems were solved using simplified assumptions and analytical techniques. However, with the advent of modern computers and sophisticated algorithms, it became possible to numerically solve the Navier-Stokes equations, enabling the simulation of realistic fluid flow scenarios. This shift has had a profound impact on various fields, including aerodynamics, weather forecasting, and industrial process design, making CFD an indispensable part of computational physics.

Mathematical modeling of fluid flow is the cornerstone of CFD. The Navier-Stokes equations, which are partial differential equations (PDEs), describe how the velocity field of a fluid evolves over time due to various forces. These equations are highly nonlinear, especially when modeling turbulent flows, making them challenging to solve analytically. Instead, numerical methods are employed to discretize these equations, transforming them into a form that can be solved computationally.

Incompressible flows, where the fluid density remains constant, and compressible flows, where the density can vary, are both handled by the Navier-Stokes equations, but with different complexities. Incompressible flow simulations often involve solving the continuity equation to ensure mass conservation, while compressible flow simulations require additional considerations for pressure and temperature variations. The distinction between laminar and turbulent flow is also crucial. Laminar flow is smooth and orderly, while turbulent flow is chaotic and characterized by vortices. The mathematical models for these flows differ significantly, requiring different approaches in their simulation.

Boundary conditions are another critical aspect of CFD. They define how the fluid interacts with the surrounding environment, such as walls, inlets, and outlets. Common types of boundary conditions include Dirichlet conditions, where the value of the flow variable is specified at the boundary, and Neumann conditions, where the gradient of the variable is specified. The choice and implementation of boundary conditions significantly impact the accuracy and stability of CFD simulations. Inaccurate or poorly implemented boundary conditions can lead to non-physical results, such as artificial oscillations or incorrect flow patterns.

Rust, a systems programming language known for its performance and safety features, is particularly well-suited for implementing CFD applications. Rust’s memory safety guarantees, enforced through its ownership model, help prevent common programming errors such as null pointer dereferencing or data races, which are critical concerns in high-performance computing environments like CFD. Additionally, Rust’s concurrency model, which emphasizes safe and efficient parallel execution, is advantageous for CFD simulations that require the handling of large datasets and complex computations across multiple processors.

To begin with, setting up a Rust environment for CFD involves choosing the appropriate libraries and tools. Libraries like nalgebra for linear algebra operations, ndarray for handling multi-dimensional arrays, and plotters for basic visualization are essential components of a Rust-based CFD toolkit. These libraries offer the necessary functionality to implement the mathematical models and numerical methods used in CFD.

Consider a simple example where we implement a 2D incompressible flow solver using Rust. The Navier-Stokes equations for incompressible flow can be discretized using a finite difference method (FDM). The pressure Poisson equation is solved iteratively, and the velocity field is updated based on the computed pressure.

use ndarray::{Array2, s};

use ndarray_linalg::solve::Inverse;

// Function to initialize the velocity field

fn initialize_velocity(nx: usize, ny: usize) -> (Array2<f64>, Array2<f64>) {

let u = Array2::<f64>::zeros((nx, ny));

let v = Array2::<f64>::zeros((nx, ny));

(u, v)

}

// Function to solve the pressure Poisson equation

fn solve_pressure_poisson(p: &mut Array2<f64>, b: &Array2<f64>, dx: f64, dy: f64, niters: usize) {

let nx = p.shape()[0];

let ny = p.shape()[1];

for _ in 0..niters {

let p_old = p.clone();

for i in 1..nx-1 {

for j in 1..ny-1 {

p[[i, j]] = ((p_old[[i+1, j]] + p_old[[i-1, j]]) * dy.powi(2) +

(p_old[[i, j+1]] + p_old[[i, j-1]]) * dx.powi(2)) /

(2.0 * (dx.powi(2) + dy.powi(2)));

}

}

// Apply boundary conditions (Dirichlet or Neumann)

p.slice_mut(s![.., 0]).fill(0.0); // p = 0 at the left boundary

p.slice_mut(s![.., ny-1]).fill(0.0); // p = 0 at the right boundary

}

}

In this code, we start by defining the velocity field as a zero-initialized 2D array using the ndarray crate. The initialize_velocity function sets up the initial conditions for the simulation. The core of the CFD solver is in the solve_pressure_poisson function, where we iteratively solve the pressure Poisson equation. This function takes in the pressure field p, the source term b, the grid spacing dx and dy, and the number of iterations niters. The pressure field is updated based on the finite difference discretization of the Poisson equation.

Boundary conditions are applied after each iteration. In this example, we enforce Dirichlet boundary conditions by setting the pressure to zero at the boundaries. This simple example demonstrates the fundamental process of setting up and solving a CFD problem using Rust. The ndarray crate provides a powerful and flexible way to manage the multi-dimensional arrays that represent our fluid flow variables, while the ownership and borrowing principles of Rust ensure that our code remains safe and free of common concurrency issues.

By leveraging Rust’s strengths in safety, performance, and concurrency, we can build robust and efficient CFD applications that are both easy to maintain and capable of handling the complex computations required for modern fluid dynamics simulations.

13.2. Discretization Methods for Fluid Dynamics

Discretization is a core concept in Computational Fluid Dynamics (CFD), involving the conversion of continuous fluid dynamics equations, such as the Navier-Stokes equations, into discrete forms that can be solved numerically. This process is essential because it enables the simulation of fluid flow over complex geometries and under various boundary conditions using computers. Discretization involves breaking down a continuous domain (such as a physical region where fluid flows) into a finite set of discrete points, called a grid or mesh. At each grid point, the governing equations of fluid dynamics are approximated by algebraic equations that can be solved using numerical methods.

Three primary methods are commonly used for discretization in CFD: the Finite Difference Method (FDM), the Finite Volume Method (FVM), and the Finite Element Method (FEM). Each of these methods has its strengths and weaknesses, making them suitable for different types of problems and applications.

The Finite Difference Method (FDM) is one of the simplest and most widely used discretization techniques. It involves approximating derivatives in the governing equations by differences between function values at adjacent grid points. FDM is particularly effective for problems with simple geometries and structured grids, where the grid points are aligned in a regular pattern.

The Finite Volume Method (FVM) is another popular approach, especially in engineering applications. It involves dividing the domain into small control volumes and applying the conservation laws (e.g., conservation of mass, momentum, and energy) over each control volume. FVM is highly effective for problems involving complex geometries and unstructured grids because it ensures the conservation of physical quantities across control volumes.

The Finite Element Method (FEM) is a more advanced and versatile method that is particularly well-suited for problems involving complex geometries and boundary conditions. In FEM, the domain is divided into small elements, and the governing equations are formulated as a system of algebraic equations using a variational approach. FEM is widely used in structural mechanics and is increasingly being applied in fluid dynamics due to its flexibility and accuracy.

The process of grid generation is crucial in CFD, as the quality and structure of the grid directly influence the accuracy and stability of the simulation. Structured grids are characterized by a regular arrangement of grid points, which simplifies the implementation of numerical methods like FDM. However, they are less flexible when dealing with complex geometries. Unstructured grids, on the other hand, consist of irregularly arranged grid points, making them more adaptable to complex geometries but also more challenging to implement and solve.

Stability and convergence are critical concepts in numerical simulations. Stability refers to the behavior of the numerical solution as the simulation progresses over time. A stable numerical method ensures that errors do not grow uncontrollably, leading to a physically meaningful solution. Convergence, on the other hand, refers to the tendency of the numerical solution to approach the exact solution as the grid is refined (i.e., as the number of grid points increases). Ensuring both stability and convergence is essential for obtaining reliable results in CFD.

Boundary layers and shock waves present additional challenges in discretized models. The boundary layer is a thin region near a solid surface where the fluid velocity changes rapidly, requiring fine grid resolution to capture accurately. Shock waves, which occur in compressible flows, involve sudden changes in pressure and density, making them difficult to resolve without introducing numerical artifacts. Special techniques, such as adaptive mesh refinement, are often used to handle these phenomena effectively.

Implementing the Finite Difference Method (FDM), Finite Volume Method (FVM), and Finite Element Method (FEM) in Rust requires a solid understanding of both the numerical methods themselves and the data structures used to represent the grid and solution variables. Rust's strengths in memory safety and concurrency make it a suitable choice for implementing these methods, particularly for large-scale simulations.

Let's start by implementing a simple 1D heat equation solver using the Finite Difference Method (FDM) in Rust. The heat equation is a parabolic partial differential equation that describes how heat diffuses through a medium over time. The equation can be written as:

$$\frac{\partial u}{\partial t} = \alpha \frac{\partial^2 u}{\partial x^2}$$

where $u(x, t)$ is the temperature distribution, $\alpha$ is the thermal diffusivity, and $x$ and $t$ are the spatial and temporal coordinates, respectively. To discretize this equation using FDM, we approximate the derivatives with finite differences:

$$\frac{u_i^{n+1} - u_i^n}{\Delta t} = \alpha \frac{u_{i+1}^n - 2u_i^n + u_{i-1}^n}{\Delta x^2}Δt$$

This can be implemented in Rust as follows:

use ndarray::Array1;

fn initialize_temperature(nx: usize) -> Array1<f64> {

Array1::from_elem(nx, 0.0) // Initialize the temperature array with zeros

}

fn update_temperature(u: &mut Array1<f64>, alpha: f64, dt: f64, dx: f64) {

let nx = u.len();

let mut u_new = u.clone();

for i in 1..nx-1 {

u_new[i] = u[i] + alpha * dt / dx.powi(2) * (u[i+1] - 2.0 * u[i] + u[i-1]);

}

*u = u_new; // Update the temperature array

}

fn main() {

let nx = 100; // Number of grid points

let alpha = 0.01; // Thermal diffusivity

let dx = 1.0 / (nx - 1) as f64; // Spatial step size

let dt = 0.0005; // Time step size

let mut u = initialize_temperature(nx);

for _ in 0..1000 {

update_temperature(&mut u, alpha, dt, dx);

}

// Output or visualize the results

println!("{:?}", u);

}

In this example, we start by initializing the temperature distribution using the ndarray crate, which provides a convenient way to handle multi-dimensional arrays in Rust. The initialize_temperature function creates an array of size nx (the number of grid points) and fills it with zeros, representing an initial uniform temperature distribution.

The update_temperature function implements the core of the FDM-based solver. It calculates the new temperature at each grid point using the finite difference approximation of the heat equation. The loop iterates over the internal grid points (excluding the boundaries) and updates the temperature based on the temperatures of the neighboring points. The new temperature values are stored in a temporary array u_new, which is then copied back to the original array u to update the solution.

The main function sets up the simulation parameters, including the number of grid points nx, the thermal diffusivity alpha, the spatial step size dx, and the time step size dt. It then initializes the temperature array and runs the simulation for a specified number of time steps. The final temperature distribution is printed out, which can be visualized or further analyzed.

This example illustrates the basic process of implementing a simple CFD solver using the Finite Difference Method in Rust. Rust's ownership model ensures that the memory used for the temperature array is safely managed, preventing issues like dangling pointers or data races. The use of the ndarray crate simplifies the handling of numerical data, making the code both efficient and easy to understand.

Moving on to the Finite Volume Method (FVM), a common application involves solving the 2D incompressible Navier-Stokes equations on a structured grid. The FVM approach involves dividing the domain into control volumes and applying the conservation laws to each control volume. Here’s a simplified implementation for updating the velocity field using FVM:

use ndarray::{Array2, s};

fn update_velocity(u: &mut Array2<f64>, v: &mut Array2<f64>, p: &Array2<f64>, dx: f64, dy: f64, dt: f64) {

let (nx, ny) = u.dim();

for i in 1..nx-1 {

for j in 1..ny-1 {

u[[i, j]] = u[[i, j]] - dt * (p[[i+1, j]] - p[[i, j]]) / dx;

v[[i, j]] = v[[i, j]] - dt * (p[[i, j+1]] - p[[i, j]]) / dy;

}

}

}

fn main() {

let nx = 50;

let ny = 50;

let dx = 1.0 / (nx as f64 - 1.0);

let dy = 1.0 / (ny as f64 - 1.0);

let dt = 0.01;

let mut u = Array2::<f64>::zeros((nx, ny));

let mut v = Array2::<f64>::zeros((nx, ny));

let p = Array2::<f64>::ones((nx, ny));

update_velocity(&mut u, &mut v, &p, dx, dy, dt);

// Further processing or visualization of the results

}

In this code, we define the update_velocity function to update the velocity fields u and v based on the pressure field p. The pressure gradient is computed and used to update the velocities in both the x and y directions. The loop iterates over the interior grid points, excluding the boundary points where special treatment is needed for the boundary conditions.

Finally, the Finite Element Method (FEM) is implemented differently, using a mesh of elements (typically triangles in 2D or tetrahedra in 3D). The FEM formulation involves defining shape functions over each element and assembling the global stiffness matrix, which can be solved to obtain the solution variables. While FEM implementations are more complex, Rust's nalgebra crate can be used to handle the linear algebra involved in assembling and solving the system of equations.

These examples highlight how Rust's capabilities—particularly its safety, concurrency, and efficient memory management—can be leveraged to implement robust and high-performance CFD solvers using various discretization methods. Rust's ecosystem, including libraries like ndarray and nalgebra, provides powerful tools for handling the numerical computations required in these simulations, allowing for the effective simulation of fluid dynamics in both simple and complex scenarios.

13.3. Solving the Navier-Stokes Equations in Rust

The Navier-Stokes equations form the foundation of fluid dynamics, describing how the velocity field of a fluid evolves over time due to forces like pressure, viscosity, and external influences. These equations are central to Computational Fluid Dynamics (CFD) because they model the flow of fluids in various scenarios, from simple laminar flows to complex turbulent flows. The Navier-Stokes equations are a set of nonlinear partial differential equations (PDEs) that combine the principles of conservation of mass, momentum, and energy. The complexity of these equations arises from their nonlinearity and the coupling between different components of the velocity field, which makes them challenging to solve analytically.

In a typical form, the Navier-Stokes equations for an incompressible fluid in three dimensions are expressed as:

$$\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u} \cdot \nabla)\mathbf{u} = -\nabla p + \nu \nabla^2 \mathbf{u} + \mathbf{f}$$

$$\nabla \cdot \mathbf{u} =0 $$

where u\\mathbf{u}u is the velocity vector, ppp is the pressure, ν\\nuν is the kinematic viscosity, and f\\mathbf{f}f represents external forces such as gravity. The first equation represents the conservation of momentum, while the second equation enforces mass conservation by ensuring the flow is divergence-free (incompressible).

Given the complexity of the Navier-Stokes equations, direct analytical solutions are typically not feasible except in very simplified cases. Therefore, numerical methods are employed to solve these equations, often involving the linearization and discretization of the equations. Linearization is a technique used to simplify the nonlinear terms in the Navier-Stokes equations, making them more tractable for numerical methods. Common linearization techniques include approximating the convective term $u(\mathbf{u} \cdot \nabla)\mathbf{u}$ using known values from previous time steps or iterations.

Two widely used algorithms for solving the discretized Navier-Stokes equations in CFD are the SIMPLE (Semi-Implicit Method for Pressure Linked Equations) and PISO (Pressure Implicit with Splitting of Operators) algorithms. The SIMPLE algorithm is a popular method for solving steady-state incompressible flows. It iteratively corrects the pressure and velocity fields to ensure that the continuity equation is satisfied. The PISO algorithm, on the other hand, is more suitable for transient problems and involves an additional pressure correction step to enhance the accuracy and stability of the solution.

In the SIMPLE algorithm, the process begins with an initial guess for the pressure and velocity fields. The momentum equations are then solved to obtain an intermediate velocity field. This velocity field typically does not satisfy the continuity equation, so a pressure correction equation is derived and solved to adjust the pressure field. The velocity field is then corrected based on the new pressure, and the process is repeated until convergence is achieved.

Implementing a Navier-Stokes solver in Rust involves several steps, including setting up the grid, discretizing the equations, implementing the SIMPLE or PISO algorithm, and optimizing the code for performance. Rust’s features, such as its ownership model and concurrency capabilities, make it well-suited for this task, ensuring memory safety and efficient parallel execution.

Let’s implement a basic 2D Navier-Stokes solver using the SIMPLE algorithm in Rust. We start by defining the grid and initializing the velocity and pressure fields. The equations are then discretized using the Finite Difference Method (FDM), and the SIMPLE algorithm is applied to iteratively solve for the velocity and pressure fields.

use ndarray::{Array2, s};

fn initialize_fields(nx: usize, ny: usize) -> (Array2<f64>, Array2<f64>, Array2<f64>) {

let u = Array2::<f64>::zeros((nx, ny));

let v = Array2::<f64>::zeros((nx, ny));

let p = Array2::<f64>::zeros((nx, ny));

(u, v, p)

}

fn solve_momentum_equations(

u: &mut Array2<f64>, v: &mut Array2<f64>, p: &Array2<f64>,

dx: f64, dy: f64, dt: f64, nu: f64) {

let (nx, ny) = u.dim();

let u_old = u.clone();

let v_old = v.clone();

for i in 1..nx-1 {

for j in 1..ny-1 {

u[[i, j]] = u_old[[i, j]]

- dt * ((u_old[[i, j]] * (u_old[[i, j]] - u_old[[i-1, j]]) / dx)

+ (v_old[[i, j]] * (u_old[[i, j]] - u_old[[i, j-1]]) / dy))

- dt * (1.0 / dx) * (p[[i+1, j]] - p[[i, j]])

+ nu * dt * ((u_old[[i+1, j]] - 2.0 * u_old[[i, j]] + u_old[[i-1, j]]) / dx.powi(2)

+ (u_old[[i, j+1]] - 2.0 * u_old[[i, j]] + u_old[[i, j-1]]) / dy.powi(2));

v[[i, j]] = v_old[[i, j]]

- dt * ((u_old[[i, j]] * (v_old[[i, j]] - v_old[[i-1, j]]) / dx)

+ (v_old[[i, j]] * (v_old[[i, j]] - v_old[[i, j-1]]) / dy))

- dt * (1.0 / dy) * (p[[i, j+1]] - p[[i, j]])

+ nu * dt * ((v_old[[i+1, j]] - 2.0 * v_old[[i, j]] + v_old[[i-1, j]]) / dx.powi(2)

+ (v_old[[i, j+1]] - 2.0 * v_old[[i, j]] + v_old[[i, j-1]]) / dy.powi(2));

}

}

}

fn correct_pressure(

p: &mut Array2<f64>, u: &Array2<f64>, v: &Array2<f64>, dx: f64, dy: f64) {

let (nx, ny) = p.dim();

let p_old = p.clone();

for i in 1..nx-1 {

for j in 1..ny-1 {

p[[i, j]] = p_old[[i, j]]

- 0.5 * ((u[[i+1, j]] - u[[i, j]]) / dx + (v[[i, j+1]] - v[[i, j]]) / dy);

}

}

}

fn main() {

let nx = 50;

let ny = 50;

let dx = 1.0 / (nx as f64 - 1.0);

let dy = 1.0 / (ny as f64 - 1.0);

let dt = 0.001;

let nu = 0.01;

let (mut u, mut v, mut p) = initialize_fields(nx, ny);

for _ in 0..1000 {

solve_momentum_equations(&mut u, &mut v, &p, dx, dy, dt, nu);

correct_pressure(&mut p, &u, &v, dx, dy);

}

// Further processing or visualization of results

println!("{:?}", u);

println!("{:?}", v);

println!("{:?}", p);

}

This code implements the core steps of the SIMPLE algorithm to solve the Navier-Stokes equations for a 2D incompressible flow. We start by initializing the velocity components (u, v) and pressure field (p) using zero arrays, which represent a quiescent fluid at rest.

The solve_momentum_equations function is responsible for updating the velocity fields by solving the discretized momentum equations. These equations are linearized, and finite difference approximations are used to approximate the spatial derivatives. The velocity components are updated iteratively, with the pressure gradient term accounting for the effect of pressure on the velocity. The viscosity term, which accounts for the diffusion of momentum, is also included.

After updating the velocities, the correct_pressure function is called to correct the pressure field to satisfy the continuity equation (mass conservation). This involves solving a discretized pressure correction equation derived from the continuity equation, ensuring that the divergence of the velocity field is minimized, leading to a physically accurate, divergence-free flow.

The main loop runs the simulation for a specified number of time steps, iteratively solving the momentum equations and correcting the pressure field. This iterative process continues until the solution converges, meaning the changes in velocity and pressure fields become negligible.

Rust's ndarray crate is utilized for handling multi-dimensional arrays, making it easier to implement the finite difference approximations and manage the grid-based data. The ownership model of Rust ensures that the memory used for these arrays is safely managed, preventing issues like memory leaks or data races that are common in CFD simulations, particularly when parallelized.

Furthermore, Rust's potential for concurrency can be leveraged to parallelize parts of the solver, such as the loops that update the velocity and pressure fields. This can significantly improve performance, especially for larger, more complex simulations.

Overall, this implementation demonstrates how Rust can be effectively used to solve the Navier-Stokes equations in a CFD context, balancing ease of implementation with performance and safety. The SIMPLE algorithm, while relatively straightforward, serves as a powerful tool for simulating fluid flows, and Rust's features ensure that the resulting code is both efficient and robust.

13.4. Turbulence Modeling in Rust

Turbulence is a complex, chaotic phenomenon that occurs in fluid flows when the inertial forces become dominant over viscous forces. It is characterized by irregular fluctuations in velocity and pressure, creating eddies of various sizes. These fluctuations make turbulence inherently difficult to predict and model. Despite this complexity, turbulence plays a crucial role in many practical applications of Computational Fluid Dynamics (CFD), such as aerodynamics, weather prediction, and industrial process simulations. Accurately modeling turbulence is essential for capturing the detailed behavior of fluid flows in these contexts.

The challenge with turbulence lies in its wide range of spatial and temporal scales. Large eddies are energy-containing structures that interact with smaller eddies, leading to a cascade of energy down to the smallest scales where it is dissipated as heat. This multi-scale nature of turbulence makes direct simulation computationally infeasible for most practical problems, necessitating the use of turbulence models.

Several turbulence models have been developed to approximate the effects of turbulence without resolving all scales directly. The most commonly used models in CFD include Reynolds-Averaged Navier-Stokes (RANS), Large Eddy Simulation (LES), and Direct Numerical Simulation (DNS).

RANS models simplify the problem by averaging the Navier-Stokes equations over time or space, introducing additional terms called Reynolds stresses that account for the effects of turbulence. These models are computationally efficient and widely used in industrial applications, but they often sacrifice accuracy, especially in complex or highly unsteady flows.

LES models offer a middle ground by resolving the larger eddies explicitly and modeling the smaller, subgrid-scale eddies. LES provides more accurate results than RANS, particularly in flows with significant unsteadiness or large-scale turbulence structures. However, it is also more computationally demanding.

DNS represents the highest level of accuracy by directly solving the Navier-Stokes equations without any modeling, resolving all scales of turbulence. While DNS provides the most detailed results, it is prohibitively expensive in terms of computational resources and is typically used only for fundamental research in simple geometries or low Reynolds number flows.

The choice of turbulence model involves a trade-off between accuracy and computational cost. For many practical applications, RANS models are sufficient, but LES or DNS may be required when detailed resolution of the turbulent structures is necessary.

Implementing turbulence models in Rust involves leveraging its performance and safety features to handle the complex calculations required for turbulence simulations. The key is to balance the need for numerical stability with the efficiency of the computations.

Let's consider the implementation of a basic RANS model in Rust. In this example, we will use the k-ε model, which is one of the most common RANS models. The k-ε model introduces two additional transport equations: one for the turbulent kinetic energy (k) and one for the turbulent dissipation rate (ε). These equations help model the Reynolds stresses and close the system of equations.

use ndarray::Array2;

// Function to initialize k and epsilon fields

fn initialize_turbulence_fields(nx: usize, ny: usize) -> (Array2<f64>, Array2<f64>) {

let k = Array2::<f64>::from_elem((nx, ny), 0.01); // Initial guess for k

let epsilon = Array2::<f64>::from_elem((nx, ny), 0.01); // Initial guess for epsilon

(k, epsilon)

}

// Function to update the k and epsilon fields

fn update_turbulence_fields(

k: &mut Array2<f64>, epsilon: &mut Array2<f64>,

u: &Array2<f64>, v: &Array2<f64>,

dx: f64, dy: f64, dt: f64, c_mu: f64, sigma_k: f64, sigma_e: f64) {

let (nx, ny) = k.dim();

let k_old = k.clone();

let epsilon_old = epsilon.clone();

for i in 1..nx-1 {

for j in 1..ny-1 {

// Compute production term

let p_k = c_mu * ((u[[i+1, j]] - u[[i-1, j]]) / (2.0 * dx)).powi(2)

+ ((v[[i, j+1]] - v[[i, j-1]]) / (2.0 * dy)).powi(2);

// Update k

k[[i, j]] = k_old[[i, j]]

+ dt * (p_k - epsilon_old[[i, j]])

+ dt * ((k_old[[i+1, j]] - 2.0 * k_old[[i, j]] + k_old[[i-1, j]]) / dx.powi(2)

+ (k_old[[i, j+1]] - 2.0 * k_old[[i, j]] + k_old[[i, j-1]]) / dy.powi(2)) / sigma_k;

// Update epsilon

epsilon[[i, j]] = epsilon_old[[i, j]]

+ dt * (c_mu * (p_k * epsilon_old[[i, j]] / k_old[[i, j]]) - epsilon_old[[i, j]].powi(2) / k_old[[i, j]])

+ dt * ((epsilon_old[[i+1, j]] - 2.0 * epsilon_old[[i, j]] + epsilon_old[[i-1, j]]) / dx.powi(2)

+ (epsilon_old[[i, j+1]] - 2.0 * epsilon_old[[i, j]] + epsilon_old[[i, j-1]]) / dy.powi(2)) / sigma_e;

}

}

}

fn main() {

let nx = 50;

let ny = 50;

let dx = 1.0 / (nx as f64 - 1.0);

let dy = 1.0 / (ny as f64 - 1.0);

let dt = 0.001;

let c_mu = 0.09;

let sigma_k = 1.0;

let sigma_e = 1.3;

let (mut k, mut epsilon) = initialize_turbulence_fields(nx, ny);

let u = Array2::<f64>::zeros((nx, ny)); // Placeholder velocity field

let v = Array2::<f64>::zeros((nx, ny)); // Placeholder velocity field

for _ in 0..1000 {

update_turbulence_fields(&mut k, &mut epsilon, &u, &v, dx, dy, dt, c_mu, sigma_k, sigma_e);

}

// Further processing or visualization of results

println!("{:?}", k);

println!("{:?}", epsilon);

}

In this example, we first initialize the turbulent kinetic energy (k) and the turbulent dissipation rate (epsilon) fields with small positive values. These fields represent the turbulence intensity and the rate at which turbulence dissipates, respectively. The initialize_turbulence_fields function sets up these fields on a structured grid.

The update_turbulence_fields function is the core of the turbulence model implementation. It updates the k and epsilon fields based on the transport equations of the k-ε model. The production term p_k is computed using the velocity gradients, which represent the generation of turbulence due to shear in the flow. The k and epsilon fields are then updated using finite difference approximations of the spatial derivatives, similar to the method used in the Navier-Stokes solver. The constants c_mu, sigma_k, and sigma_e are empirical parameters specific to the k-ε model.

The main loop in the main function runs the simulation for a specified number of time steps, updating the turbulence fields iteratively. The velocity fields u and v are placeholders in this example, representing the flow field that would be solved alongside the turbulence model in a complete CFD simulation.

This implementation highlights how Rust's features, such as its safety and performance, can be effectively used to implement turbulence models like the k-ε model. The use of ndarray allows for efficient handling of the grid and field data, while Rust's ownership model ensures that memory is managed safely, preventing issues such as race conditions or memory leaks.

Once the turbulence model is implemented, the next step would involve integrating this model with the Navier-Stokes solver. The combined system would simulate the full turbulent flow, where the turbulence model provides closure to the Reynolds-averaged equations. Additionally, visualization of the results is crucial for analyzing turbulent flows. Rust can be integrated with visualization tools such as ParaView or VTK, either by exporting the simulation data to a compatible format or by using Rust bindings to directly interface with these tools.

This example demonstrates how Rust can be used to implement complex turbulence models in CFD, balancing computational efficiency with the need for numerical stability. The ability to model turbulence accurately is essential for capturing the detailed behavior of fluid flows in many practical applications, and Rust provides the tools needed to achieve this with both reliability and performance.

13.5. Parallel and Distributed Computing in CFD

Parallel and distributed computing are critical in Computational Fluid Dynamics (CFD) because of the immense computational resources required to solve large-scale fluid flow problems. Simulating complex fluid dynamics often involves solving nonlinear partial differential equations like the Navier-Stokes equations over a fine mesh, resulting in millions or even billions of degrees of freedom. As the complexity and size of the problem grow, single-threaded computation becomes insufficient. Parallel computing, which involves distributing computational tasks across multiple processors, and distributed computing, where tasks are spread across different nodes in a network, become essential for making these simulations feasible within a reasonable time frame.

One of the primary computational challenges in CFD is the need to balance the workload among processors efficiently while minimizing communication overhead. This balance is crucial because uneven distribution can lead to some processors being idle while others are overburdened, reducing overall efficiency. Communication between processors, especially in distributed systems, adds another layer of complexity, as it can introduce latency and synchronization issues, particularly in tightly coupled systems where data needs to be exchanged frequently.

To address these challenges, several techniques and strategies are employed in parallel and distributed CFD. Domain decomposition is one of the most common methods, where the computational domain (the fluid flow region) is divided into subdomains, each handled by a different processor. The decomposition can be done in various ways, such as block-based decomposition or strip decomposition, depending on the geometry and flow characteristics.

Load balancing is crucial to ensure that each processor has an approximately equal amount of work. This involves dynamically or statically assigning computational tasks based on the current workload. In static load balancing, the workload is distributed before the simulation begins, while dynamic load balancing adjusts the workload during the simulation to account for varying computational demands.

Communication strategies are also vital in parallel CFD, particularly in distributed computing environments. Efficient communication between processors is necessary to exchange boundary data between subdomains, update global variables, and synchronize computations. In distributed systems, this is often achieved using the Message Passing Interface (MPI), a standardized and portable message-passing system designed for parallel computing.

Rust, known for its safety and performance, is increasingly being adopted in high-performance computing (HPC) and parallel computing due to its powerful concurrency features and ability to prevent common errors like data races. Rust's ownership model, along with concurrency tools like threads and async, make it an excellent choice for implementing parallel and distributed algorithms.

Implementing parallel CFD algorithms in Rust involves using crates like Rayon for data parallelism and MPI bindings for distributed computing. Rayon simplifies parallelism by allowing developers to parallelize iterators with minimal effort, while MPI provides the tools needed to manage communication between distributed nodes.

Let's start with a simple example of using Rayon to parallelize a CFD simulation loop. Suppose we are implementing a 2D heat equation solver, and we want to parallelize the update of the temperature field.

use ndarray::Array2;

use rayon::prelude::*;

fn update_temperature_parallel(u: &mut Array2<f64>, alpha: f64, dt: f64, dx: f64, dy: f64) {

let (nx, ny) = u.dim();

let u_old = u.clone();

// Parallelizing the update using Rayon's parallel iterators

u.axis_iter_mut(ndarray::Axis(0)).into_par_iter().for_each(|mut row| {

let i = row.index();

for j in 1..ny-1 {

if i > 0 && i < nx-1 {

row[j] = u_old[[i, j]] + alpha * dt * (

(u_old[[i+1, j]] - 2.0 * u_old[[i, j]] + u_old[[i-1, j]]) / dx.powi(2) +

(u_old[[i, j+1]] - 2.0 * u_old[[i, j]] + u_old[[i, j-1]]) / dy.powi(2)

);

}

}

});

}

fn main() {

let nx = 100;

let ny = 100;

let alpha = 0.01;

let dx = 1.0 / (nx - 1) as f64;

let dy = 1.0 / (ny - 1) as f64;

let dt = 0.0001;

let mut u = Array2::<f64>::zeros((nx, ny));

// Initial conditions, e.g., setting a hot region in the grid

for _ in 0..1000 {

update_temperature_parallel(&mut u, alpha, dt, dx, dy);

}

// Output or visualization

println!("{:?}", u);

}

In this example, we use Rayon to parallelize the temperature update across rows in the 2D grid. The into_par_iter method from Rayon converts the iterator into a parallel iterator, allowing the temperature update to be computed concurrently across different rows. Each thread processes a portion of the grid, leading to significant performance improvements on multi-core systems.

For distributed computing, we can use Rust's MPI bindings to distribute the CFD simulation across multiple nodes. Here’s a basic example of initializing MPI in Rust and distributing the computation of a simple operation (such as summing up values) across multiple processors.

use mpi::traits::*;

use mpi::topology::Communicator;

fn main() {

let universe = mpi::initialize().unwrap();

let world = universe.world();

let rank = world.rank();

let size = world.size();

let local_value = rank as f64 + 1.0; // Each processor has a different value

let mut global_sum = 0.0;

// Sum up all local values into a global sum

world.all_reduce_into(&local_value, &mut global_sum, mpi::operation::Sum);

if rank == 0 {

println!("The global sum is: {}", global_sum);

}

}

In this code, MPI is initialized, and the total number of processors and each processor's rank (unique identifier) are determined. Each processor computes a local value, which is then summed across all processors using the all_reduce_into method, an MPI operation that gathers and reduces data from all processors. The result, global_sum, is available on all processors, but typically only the root processor (rank 0) would print it out.

This approach can be extended to distribute the computation of a CFD simulation. For instance, each processor could be responsible for a subdomain of the computational grid, and after updating its part of the grid, the boundary data would be exchanged with neighboring processors using MPI communication functions. This allows the simulation to scale across large clusters, reducing the computation time significantly.

Case Study: Large-Scale CFD Simulation in Rust

Consider a large-scale CFD simulation involving the flow around a complex geometry, such as an aircraft wing. The computational domain is decomposed into subdomains, each handled by a different processor. Parallelism within each subdomain is achieved using Rayon, while MPI manages communication between subdomains across different nodes.

The implementation would involve initializing MPI and distributing the grid and initial conditions among the processors. Each processor then performs the simulation on its subdomain, using Rayon to parallelize the operations within the subdomain. After each time step, the processors exchange boundary data to ensure continuity across the domain. Finally, the results from all processors are gathered and combined for post-processing and visualization.

This approach demonstrates the power of combining Rust's parallelism capabilities with distributed computing frameworks like MPI. Rust's safety and performance characteristics make it an excellent choice for large-scale CFD simulations, where efficiency, scalability, and correctness are paramount.

In summary, Rust provides the tools necessary to implement efficient parallel and distributed CFD simulations, from parallelizing simple loops with Rayon to managing complex multi-node simulations with MPI. By leveraging these tools, large-scale simulations can be executed more efficiently, enabling the study of complex fluid dynamics problems that would be infeasible on a single processor.

13.6. Visualization and Analysis of CFD Results

Visualization is a critical aspect of Computational Fluid Dynamics (CFD) as it allows researchers and engineers to interpret complex simulation data, understand flow behavior, and identify patterns that are not immediately apparent from raw numerical outputs. The primary purpose of visualizing CFD results is to convert large datasets into graphical representations that provide intuitive insights into fluid flow phenomena. Common visualization techniques in CFD include streamlines, vector plots, and contour plots.

Streamlines represent the path that a fluid particle follows, providing a visual representation of the flow direction and behavior within the fluid domain. They are particularly useful for understanding flow patterns around objects, such as airflow over an aircraft wing.

Vector plots display velocity vectors at various points in the domain, offering a snapshot of the fluid's speed and direction at those locations.

Contour plots represent scalar quantities, such as pressure or temperature, across a domain by using lines or colors to indicate regions of constant value. These plots are essential for analyzing gradients and identifying areas of interest, such as high-pressure zones or thermal hotspots.

The accuracy and resolution of these visualizations are crucial, as they directly impact the quality of the insights gained. High-resolution visualizations can reveal fine details in the flow, while low-resolution or inaccurate visualizations might obscure critical phenomena. Computational geometry plays an essential role in creating accurate visualizations by managing the mesh and flow fields used in CFD simulations.

Post-processing is the stage where raw CFD data is converted into meaningful visual outputs. This process involves extracting relevant information from the simulation, such as velocity fields, pressure distributions, and vorticity, and then applying visualization techniques to this data. The effectiveness of post-processing depends on the accuracy of the data and the resolution at which it is visualized.

Accuracy in visualization is achieved by ensuring that the computational grid or mesh used in the simulation is sufficiently refined to capture the important features of the flow. Additionally, the numerical methods used must minimize errors that could distort the visualization.

Resolution refers to the level of detail in the visual output. Higher resolution visualizations require more computational resources but can provide a more detailed and accurate representation of the flow. Conversely, lower resolution might be used for preliminary analysis or when computational resources are limited.

Computational geometry techniques are often used to generate the meshes required for CFD simulations. These meshes are critical for defining the flow field and ensuring that the visualization accurately represents the underlying physics. Tools for mesh generation and manipulation are thus integral to the visualization process.

Integrating Rust with visualization libraries and external tools is a key step in generating high-quality visualizations of CFD results. Rust’s performance and safety make it an excellent choice for handling large datasets and complex visualization tasks, and it can be integrated with various libraries and tools to enhance its capabilities.

Let's start with a simple example of generating a contour plot of pressure data from a 2D CFD simulation using Rust and the plotters crate. We assume that the pressure data has already been computed and stored in a 2D array.

use plotters::prelude::*;

use ndarray::Array2;

fn generate_contour_plot(p: &Array2<f64>, output_file: &str) -> Result<(), Box<dyn std::error::Error>> {

let (nx, ny) = p.dim();

let root = BitMapBackend::new(output_file, (600, 600)).into_drawing_area();

root.fill(&WHITE)?;

let mut chart = ChartBuilder::on(&root)

.caption("Pressure Contour", ("sans-serif", 20))

.margin(10)

.x_label_area_size(30)

.y_label_area_size(30)

.build_cartesian_2d(0..nx, 0..ny)?;

chart.configure_mesh().draw()?;

let pressure_min = p.iter().cloned().fold(f64::INFINITY, f64::min);

let pressure_max = p.iter().cloned().fold(f64::NEG_INFINITY, f64::max);

chart.draw_series(

p.indexed_iter().map(|((i, j), &value)| {

let color = HSLColor(240.0 - 240.0 * (value - pressure_min) / (pressure_max - pressure_min), 1.0, 0.5);

Rectangle::new([(i as i32, j as i32), (i as i32 + 1, j as i32 + 1)], color.filled())

})

)?;

root.present()?;

Ok(())

}

fn main() {

let nx = 100;

let ny = 100;

let mut p = Array2::<f64>::zeros((nx, ny));

// Assume p is filled with pressure data from a CFD simulation

// Here we just use a simple pattern for demonstration

for i in 0..nx {

for j in 0..ny {

p[[i, j]] = ((i as f64 - nx as f64 / 2.0).powi(2) + (j as f64 - ny as f64 / 2.0).powi(2)).sqrt();

}

}

generate_contour_plot(&p, "pressure_contour.png").expect("Failed to generate contour plot");

}

In this example, we use the plotters crate to create a contour plot of the pressure field. The generate_contour_plot function takes a 2D array p containing the pressure values and an output file name. It initializes a drawing area using BitMapBackend, which creates an image file, and then sets up the chart using ChartBuilder.

We calculate the minimum and maximum pressure values to normalize the data for color mapping. The color is then determined by mapping the pressure value to a hue in the HSL color space, with higher pressures shown in warmer colors and lower pressures in cooler colors. The pressure values are plotted as rectangles, each representing a grid cell in the CFD domain. Finally, the image is saved to the specified file.

This simple visualization allows us to see the distribution of pressure across the domain, which can reveal important flow features such as shock waves or stagnation points.

For more advanced visualizations, such as 3D vector plots or streamlines, Rust can be integrated with external tools like ParaView or VTK. These tools are designed specifically for scientific visualization and can handle more complex datasets and renderings. Rust can generate the required data files in formats like VTK, which can then be loaded into ParaView or VTK for visualization.

Automation is crucial when dealing with large datasets generated by CFD simulations. Rust can automate the post-processing tasks, such as extracting data, generating visualizations, and organizing the results.

For example, after running a series of CFD simulations with different parameters, Rust can automatically generate a series of contour plots for each parameter set and organize them into folders. This not only saves time but also ensures consistency in how the results are processed and visualized.

Rust's concurrency features can also be leveraged to parallelize post-processing tasks, especially when working with large datasets. By using Rust's async features or crates like Rayon, multiple visualizations can be generated simultaneously, further speeding up the analysis process.

In conclusion, visualization and analysis are critical components of CFD that convert raw simulation data into meaningful insights. Rust, with its powerful libraries and integration capabilities, provides a robust platform for generating high-quality visualizations, automating post-processing tasks, and ensuring the accuracy and resolution of the visual output. Whether using simple 2D plots with plotters or advanced 3D visualizations with tools like ParaView, Rust enables efficient and effective analysis of CFD results.

13.7. Conclusion

Chapter 13 encapsulates the power and precision of Computational Fluid Dynamics (CFD) when implemented using Rust. By mastering the techniques and methodologies presented in this chapter, readers will be equipped to tackle complex fluid dynamics simulations with confidence, leveraging Rust’s strengths in safety, concurrency, and performance.

Explain the process of deriving the Navier-Stokes equations from the fundamental principles of fluid mechanics. How do these equations capture the behavior of both incompressible and compressible flows? Discuss the challenges involved in solving these equations numerically and how those challenges can be addressed using Rust.

Discuss the importance of boundary conditions in CFD simulations. What are the different types of boundary conditions commonly used (e.g., Dirichlet, Neumann, Robin), and how do they affect the results of a simulation? Provide examples of how each type can be implemented in Rust, including the handling of complex geometries.

Explore the role of Rust’s ownership model in managing memory safety during CFD simulations. How does Rust’s approach to memory management compare with that of other languages traditionally used in CFD, such as C++? Discuss how Rust’s memory safety features can prevent common bugs in large-scale fluid dynamics simulations.

Conduct a comparative study of CFD implementations in Rust versus other programming languages commonly used in CFD, such as C++, Python, and Fortran. What are the key advantages and disadvantages of Rust in terms of performance, safety, and ease of use? Discuss how these factors influence the choice of Rust for CFD applications.

Compare and contrast the finite difference, finite volume, and finite element methods as they pertain to the discretization of fluid flow equations. How do the choice of method and the structure of the grid affect the accuracy, stability, and computational cost of simulations? Provide specific examples of how each method can be implemented in Rust.

Describe the steps involved in generating a computational grid or mesh for CFD simulations. How do structured and unstructured grids influence the simulation results? Discuss the advantages and disadvantages of various mesh refinement techniques and explain how to implement them in Rust for both 2D and 3D simulations.

Discuss the criteria for ensuring the stability and convergence of numerical algorithms used in CFD simulations. What are the common pitfalls that can lead to instability or divergence in a simulation? Provide a detailed explanation of how these criteria can be programmed in Rust to safeguard against such issues.

Analyze the data structures most commonly used in CFD simulations, such as arrays, vectors, and custom types. How can these data structures be optimized in Rust to handle the large datasets typically involved in CFD? Discuss how Rust’s traits and generics can be leveraged to create flexible and efficient data structures for CFD.

Discuss the Rust libraries and crates available for implementing numerical methods in CFD. How do these libraries compare with similar libraries in languages like C++ (e.g., Eigen, Blitz++) or Python (e.g., NumPy, SciPy)? Provide examples of how to use these libraries in Rust to solve common CFD problems.

Explain the SIMPLE (Semi-Implicit Method for Pressure Linked Equations) algorithm in detail. How does this algorithm handle pressure-velocity coupling in incompressible flows? Provide a step-by-step guide for implementing SIMPLE in Rust, highlighting any potential optimizations for large, complex domains.

Explain the challenges associated with solving the nonlinear Navier-Stokes equations numerically. How can these challenges be addressed using Rust’s language features? Provide strategies for managing computational precision and ensuring numerical stability in Rust-based solvers.

Provide strategies for optimizing CFD code written in Rust. How can profiling tools be used to identify performance bottlenecks? Discuss specific techniques for improving the efficiency of Rust code, such as minimizing memory allocations, optimizing loops, and using parallel processing where appropriate.

Design a case study involving the implementation of the SIMPLE algorithm for solving the Navier-Stokes equations in Rust. Identify the key challenges you would expect to encounter, such as handling complex geometries, ensuring numerical stability, and optimizing performance. Discuss how you would address these challenges using Rust’s language features.

Provide a comprehensive comparison of turbulence models used in CFD, such as RANS (Reynolds-Averaged Navier-Stokes), LES (Large Eddy Simulation), and DNS (Direct Numerical Simulation). What are the key assumptions and approximations of each model? How can these models be effectively implemented in Rust, considering both accuracy and computational cost?

Discuss the importance of turbulence modeling in practical CFD applications. How can different turbulence models be implemented in Rust to ensure numerical stability and accuracy? Provide examples of integrating Rust code with visualization tools to analyze turbulent flow data.

Explore the trade-offs between accuracy and computational cost in different turbulence models. How can Rust be used to balance these factors effectively when simulating turbulent flows? Provide case studies demonstrating the implementation of various turbulence models in Rust.

Investigate the use of parallel computing strategies in CFD simulations. How can domain decomposition and parallel processing be utilized to reduce computational time? Discuss the best practices for implementing parallelism in Rust, including an analysis of Rust’s concurrency features like Rayon and Tokio for CFD applications.

Discuss the challenges and solutions for implementing distributed computing in Rust for large-scale CFD simulations. How can Rust be integrated with MPI (Message Passing Interface) or other distributed computing frameworks to manage data across multiple processors? Provide a detailed implementation example for a CFD problem.

Examine the strategies for optimizing parallel performance and memory usage in Rust-based CFD simulations. How can Rust’s ownership and concurrency features be leveraged to enhance performance? Provide examples of load balancing techniques and their implementation in Rust.

Examine the challenges of handling large datasets in CFD simulations, particularly in terms of memory management and computational efficiency. How can Rust’s concurrency model be leveraged to process these datasets effectively? Discuss strategies for parallelizing data processing tasks and managing memory in large-scale simulations.

Design a case study involving a large-scale CFD simulation implemented in Rust. Identify the key challenges you would expect to encounter, such as handling complex geometries, ensuring numerical stability, and optimizing performance. Discuss how you would address these challenges using Rust’s language features.

Explore the various techniques available for visualizing CFD results, including both 2D and 3D visualizations. How can Rust be used in conjunction with visualization tools like ParaView, Matplotlib, or VTK to create detailed and accurate representations of fluid flow? Provide examples of how to integrate Rust with these tools.

Explain the importance of post-processing in CFD simulations. What are the typical post-processing tasks (e.g., calculating flow rates, analyzing turbulence, extracting streamlines), and how can they be automated using Rust? Discuss the role of Rust’s standard library and third-party crates in performing these tasks efficiently.

Discuss the importance of accuracy and resolution in visualizing complex fluid flows. How can computational geometry be utilized in Rust to enhance mesh and flow field visualization? Provide best practices for ensuring that visualizations accurately represent simulation data.

Provide strategies for automating post-processing and visualization tasks in Rust. How can Rust’s concurrency and efficiency be leveraged to streamline the generation of high-quality visualizations for large CFD datasets? Include examples of integrating Rust with external visualization tools to enhance workflow.

By engaging with these questions, you’ll develop a deep comprehension of CFD principles and gain the skills necessary to tackle complex simulations with confidence. Let this journey into CFD and Rust inspire you to push the boundaries of computational physics and innovate in your future projects.

13.7.2. Assignments for Practice

Each exercise is structured to build your skills progressively, starting from fundamental implementations to more complex, performance-oriented tasks. Through these exercises, you will not only improve your coding proficiency in Rust but also enhance your ability to solve real-world CFD problems with efficiency and precision.

Exercise 13.1: Navier-Stokes Equations Implementation

Implement the Navier-Stokes equations for both incompressible and compressible flows in Rust. Begin by deriving the equations from basic fluid mechanics principles, and then translate them into a Rust implementation. Ensure that your implementation can handle different boundary conditions. Test the stability and accuracy of your solver by applying it to a simple flow problem, such as flow in a channel or around a cylinder. Analyze the results and discuss any numerical instabilities encountered.

Exercise 13.2: Finite Volume Method Application

Develop a simple CFD solver using the finite volume method in Rust. Start by selecting a 2D problem, such as the flow over an airfoil or in a cavity. Discretize the governing equations using the finite volume approach, and implement the solver in Rust. Experiment with different grid resolutions and compare the accuracy of your results. Optimize your code to improve computational efficiency, and document the performance improvements observed.

Exercise 13.3: Turbulence Modeling with LES

Implement a Large Eddy Simulation (LES) model for a turbulent flow problem in Rust. Begin by selecting a relevant flow problem, such as turbulent flow in a pipe or over a flat plate. Implement the LES model in Rust, focusing on the subgrid-scale modeling and the numerical discretization. Run simulations for different Reynolds numbers and analyze the impact of the turbulence model on the flow structure. Discuss the challenges and limitations of LES and how they might be mitigated.

Exercise 13.4: Parallel Computing in CFD

Take a complex 3D CFD problem, such as the simulation of airflow over a complex geometry, and implement a parallel solution in Rust. Use Rust’s concurrency features or integrate with parallel computing libraries like MPI. Begin by partitioning the domain and distributing the computational workload across multiple processors. Analyze the speedup and efficiency gained from parallelization. Discuss the challenges faced during implementation, such as communication overhead and load balancing, and propose solutions to address them.

Exercise 13.5: CFD Post-Processing Automation

Create a Rust program that automates the post-processing of CFD simulation data. Your task is to extract key flow characteristics such as velocity profiles, pressure distributions, and vorticity fields from the simulation results. Integrate your program with a visualization tool (e.g., Paraview) to generate plots and 3D visualizations. Experiment with different methods for filtering and smoothing the data to enhance visualization clarity. Evaluate the effectiveness of your post-processing routines in conveying the essential features of the flow.

The knowledge and experience you gain from these exercises will equip you to tackle complex fluid dynamics problems with confidence, pushing the boundaries of what you can achieve in both computational physics and software development. Keep pushing your limits, and remember that each challenge you overcome is a step closer to becoming an expert in the field.

Comments